Kids are back to school this week. Related: The end of the briefing has some data on how majors have changed over the last few years, and how young people feel about their degrees after they graduate (regrets, they have a few). Somewhat related, I’ve been asked by some of you for a more detailed write-up on "Boundless Life". So expect that in the next month or two. I have advertisers for the next five weeks, so that is an external commitment for content every week for at least the next month. This week was a big week for AI (see below) and some interesting news in streaming television. Enjoy.

I mentioned before that I will eventually be moving the newsletter to consolidate it with my Marketing BS newsletter. I have not put in the time to actually make that transition happen, and as a result you have been short-changed when I have prioritized getting the other newsletter out, and this one sometimes misses a week or two. Have no fear. The transition will happen: exactly the same content (slightly prettier), but from a new IP address. Stay tuned. There is nothing for you to do except be aware.

This Week's Sponsor:

Your Personal Design Team to Get Things Done

Looking for design talent to create landing pages, display ads, decks, print pieces, graphics, or UX/UI designs? Check out Catalog. For a flat monthly fee (with no commitments), you get unlimited design services with your own design director, project manager, and top designers. See designs in 2–4 days depending on complexity and make as many revision requests as you need. Plus, get $500 off when you mention this newsletter!

Marketing

Connected TV: Pete Harrison wrote a short post on how to best test into television in this new changing streaming landscape. His conclusions:

Test in connected TV BEFORE linear. Expect your final budget to be ~75% CTV/25% linear

Test at low CPMs in the $5-$15 range before going higher

Run lots of test cells to see what works (like the old days on Facebook)

Test on lots of different devices — don’t avoid computers and mobile

Set up your tracking to get REAL sales impact and not “survey data”

Bundles: In the wake of Discovery moving to bundle their filler-content with HBO, Paramount is bundling Paramount+ and Showtime. Until now the two brands were only available in their own apps. Now they are both in Paramount+ and you can subscribe to both services for $7.99 ($12.99 for no ads) for a limited time. Note that Showtime stand-alone is priced at $10.99. Related: Walmart is bundling Paramount+ into their version of Amazon Prime. Mike Shields has a good take on how important Walmart is becoming as a player in the ad space.

Netflix: The streaming service has announced that their ad units will be “premium priced”, with estimates of around $65 CPMs. I have a hard time understanding how they expect to get away with prices like that. They can argue they have a “premium audience”, but only the most price-sensitive of their audience is going to opt into the ad-supported tier. I expect it will only be unsophisticated marketers of large brands looking for whatever incremental frequency they can get who will pay those prices.
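For a sense of scale: CPM is the cost per 1,000 impressions, so a fixed budget buys far fewer views at $65 than in the $5-$15 test range mentioned above. A minimal sketch of that arithmetic, assuming a hypothetical $10,000 test budget (my own illustrative number, not one from Netflix or the linked posts):

```python
def impressions_for_budget(budget_usd: int, cpm_usd: int) -> int:
    """Return whole impressions bought: CPM is the price of 1,000 impressions."""
    # Integer math avoids floating-point rounding; partial impressions are dropped.
    return budget_usd * 1000 // cpm_usd


TEST_BUDGET_USD = 10_000  # hypothetical budget, for illustration only

# $65 is the estimated Netflix CPM; $15 and $5 bracket the test range above.
for cpm in (65, 15, 5):
    bought = impressions_for_budget(TEST_BUDGET_USD, cpm)
    print(f"${cpm} CPM -> {bought:,} impressions")
```

Whatever the budget, the ratio is what matters: every dollar at a $5 CPM buys 13 times the impressions of a dollar at $65.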

Apple: Austin Allred points out that Apple’s latest privacy and advertising moves can be thought of as brilliant marketing and public relations. Marketing is Everything was one of my first posts when I launched this Substack.

Amazon Cloud: The Information ($) claims that Amazon will soon announce the ability to better track advertising performance by using a “data clean room”, where AWS “Bastion” (the new service name) will pool ad performance across many companies and share the results back without breaking any of the “third party” ad tracking rules that Apple has demanded. Aggregators going to aggregate.

Arbitrage: Alex Tabarrok explains how universities may be able to game the latest changes to education financing. Schools could double tuition, and then provide cash-back incentives to students to cover payments on the loans (which are capped with the new program). Interesting for marketers for the parallels to loyalty programs. Could airlines and hotels go even further in their programs, say doubling prices, but then giving back hundreds of dollars in points with every stay? The goal would be to find structures that are relatively cost-neutral for consumers, but a great deal for corporate travelers whose company pays the list price while they personally profit from the kick-backs.

TikTok: Case study on a temporary tattoo company that switched the majority of their marketing spend to TikTok. Seems like the strategy is to pay influencers and track individual content effectiveness (in terms of revenue per view? Unclear.), and then use the TikTok ad network to get more views on the most effective content. I’m a little skeptical, but finding SOMETHING that works on TikTok should be a priority for many marketers: the channel is getting too big to ignore. (My personal best bet is to use TikTok to drive traffic and then re-target in other media against those specific users. But I have not yet seen that tested in practice.)

Marketing to Employees

Marketing to Franchisees: Radio Shack’s recent tweets have been very NSFW. I have argued that doing things like this, which are offensive to some customers, is a smart play as long as it does not offend your employees. Customers really don’t care about your company, so if you can get people talking about you, even if it’s in a controversial way, you usually end up ahead. Unfortunately it is not just employees that matter: some Radio Shack dealers are upset and shutting down their store-within-stores. Maybe I should rephrase “Marketing to Employees” to “Marketing to non-consumers”. Not as catchy.

Employee Heterogeneity:

AI

Stable Diffusion: Last week an AI-tools company called “HuggingFace” (think of them as Github for AI) in partnership with a few other AI organizations released “Stable Diffusion”, an open-source model for text-to-image creation. Unlike Dall-e2, which released access to their model, HuggingFace has released the ENTIRE model, which means anyone can now run a full text-to-image AI on their own server. This means a few things:

There are no more guardrails. OpenAI and other providers of text-to-image AIs created many limits and restrictions on how the software could be used. When it’s code running on your own server you can do whatever you want (within the laws of the country you are operating in)

There are many companies that jumped up to provide front-end access to Stable Diffusion, including HuggingFace itself (free demo here), and Dreamstudio (see next link)

In addition to text-to-image, Stable Diffusion allows image+text-to-image, so you can create a rough sketch and then have the AI build off it to create a brand new image in whatever style you select

Dreamstudio: A very front-end tool for getting access to Stable Diffusion. Dreamstudio is available to anyone (in beta) and creates a single image, but in my experience the quality is much better than MidJourney and it is FAST. I let my 7-year-old play with it and this was her favorite. She wanted to create a cover for her personal journal:

What is an artist?: Big news last week was that the winner of a digital art competition admitted to using MidJourney (a Dall-e competitor). Artists are angry and believe it was cheating. This is the modern version of 19th-century painters claiming photography was not art, and 1980s photographers claiming that using Photoshop was cheating. Art will find a way. Related: One Bookshelf, an ecommerce company in the indie RPG space (DriveThruRPG is their largest brand) has announced that going forward all products sold on their site need to identify when artwork is created with AI tools. Unclear how this could ever be enforced.

Pricing: OpenAI has dropped its pricing of GPT-3. The most powerful model (Davinci) has dropped from $0.06/1000 tokens to $0.02/1000 tokens. The cost to translate Harry Potter from English to Spanish has dropped from about $12 to $4. It’s Moore’s Law all over again, this time with AI tools. From the team: “We have been looking forward to this change for a long time. Our teams have made incredible progress in making our models more efficient to run, which has reduced the cost it takes to serve them.”
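The Harry Potter numbers are easy to sanity-check. A quick sketch in Python that backs out the token count implied by the old price and the $12 figure, then re-prices the same job at the new rate. The helper names are my own, and the token count is derived from the figures above rather than measured:

```python
from decimal import Decimal


def implied_tokens(total_cost: Decimal, price_per_1k: Decimal) -> Decimal:
    """Back out how many tokens a job used from its cost and the per-1,000-token price."""
    return total_cost / price_per_1k * 1000


def job_cost(tokens: Decimal, price_per_1k: Decimal) -> Decimal:
    """Cost of processing `tokens` tokens at a given per-1,000-token price."""
    return tokens / 1000 * price_per_1k


OLD_PRICE = Decimal("0.06")  # Davinci, dollars per 1,000 tokens, before the cut
NEW_PRICE = Decimal("0.02")  # Davinci, dollars per 1,000 tokens, after the cut

# "About $12" at the old price implies the size of the translation job.
tokens = implied_tokens(Decimal("12"), OLD_PRICE)
new_cost = job_cost(tokens, NEW_PRICE)

print(f"Implied job size: {int(tokens):,} tokens")
print(f"Cost at new price: ${new_cost:.2f}")
```

Decimal is used instead of float so that prices like 0.06 stay exact. The price fell to a third, so the cost of any fixed job falls to a third as well.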

Accent “Correction”: Using AI to change Indian accents into American accents in real time. Commercialized.

Textual Inversion: Researchers are working on the ability to use Dall-e type textual models to modify existing images rather than create from text alone. We are just at the start of what these models will be capable of. Expect Photoshop-type tools in many different variations, all of which will allow for the creation of incredibly beautiful images. Aesthetics are about to get a lot better. Our grandkids are going to wonder how we functioned without these tools.

Panoptic scene graph generation: Louis Bouchard points out that this is one of the things image-identification models are still very bad at. AI can now identify images, classify them, and segment where in an image different objects appear, but current models still struggle with understanding the relationship between objects and what is actually happening in a picture. This is why Dall-e will often get confused if there is more than one subject with different adjectives in a request. It “knows” that the human will ride the horse, but if you tell it that a shark in a suit is riding a red squirrel it gets confused and you get images like this (created with Dream Studio):

New York Times: AI’s rapid advances are finally getting into the mainstream press. Last month it was the Economist, now the NYT says that, “We Need to Talk About How Good A.I. Is Getting”. You won’t get the cutting edge news from the NYT, but you can see where the conversation is going.

AI-Human collaboration: Tyler Cowen at Marginal Revolution recommends “Working with AI” for real world examples of human-AI collaboration. I have added it to my list.

AI Film: The first film created entirely with AI. “Tiger goes to the city”. It is a silent film, and looks like something out of the 1920s, but as I keep saying, the technology is getting better very quickly.

Implications: Elad Gil summarized his take on where these transformer-model AI tools are going. Among other things (worth reading the whole thing), he sees three types of companies coming from the new tools:

Platforms: The companies that build the AI models like OpenAI, Google, Facebook, Microsoft, etc. Other companies and individuals will pay to have access

Stand-alone: Companies that put wrappers on the tech to solve specific problems like writing marketing copy, hosting chatbots, automated email tools, internal knowledge systems, etc. Think of this like how Uber became possible because of the smartphone

Tech-enabled Incumbents: Every tech company will incorporate the transformer models to enhance their products. Word processors will allow you to click a button to “expand on your text”, PowerPoint and Photoshop will allow you to create any image you want just by describing it, Salesforce will have many new automation tools for managing your customers.

Careers

Learning to Code: Freddie deBoer argues that coding pays well only because of high demand and low supply, and that as more people switch their majors to computer science, they will be disappointed with the results. He is partially right: supply and demand clearly matter. But they are not the only reason someone is paid well. Employees that create a lot of value are paid well, and being able to code allows you to create value. There are limits to this (eventually there will be no valuable projects for a coder to develop), but I don’t see that happening in our lifetime. Supply and demand still exist, but “demand” is not something magical; it depends on the ability of the supply to create value. All this traces back to a viral Twitter thread from Benjamin Schmidt showing changes in undergraduate majors over time (among other things):

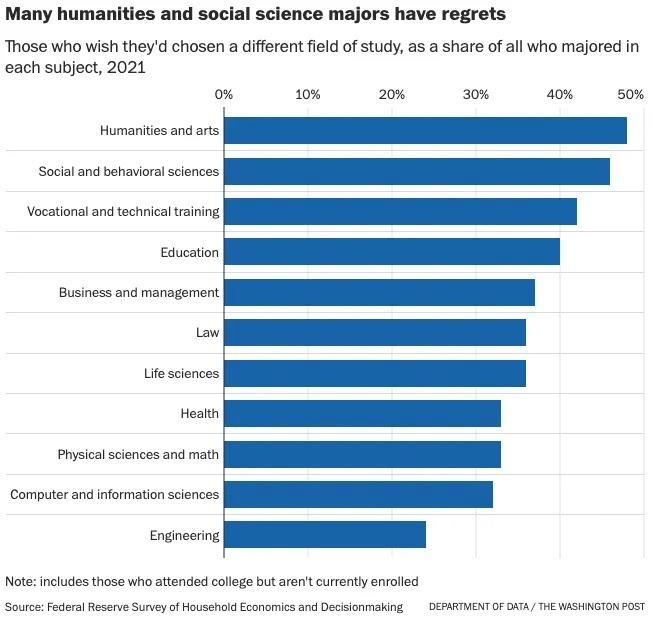

Regrets: Related to the above, 40% of American college graduates regret their majors, and it varies just the way you would expect:

Remote Effectiveness: I was in Ottawa, Canada last week where everyone was talking about the federal government forcing all employees to be back in the office two days per week. Most people I spoke to thought it was ridiculous and that they were more productive working from home. I think that is still true if you are doing “non-innovative work”, but it gets less true the more collaboration and hard thinking is required. This paper (h/t MR) shows that chess players perform objectively worse when playing remotely. At most jobs we cannot measure effectiveness with anywhere near this degree of precision, but I would not be surprised if the results were the same.

Keep it Simple,

Edward